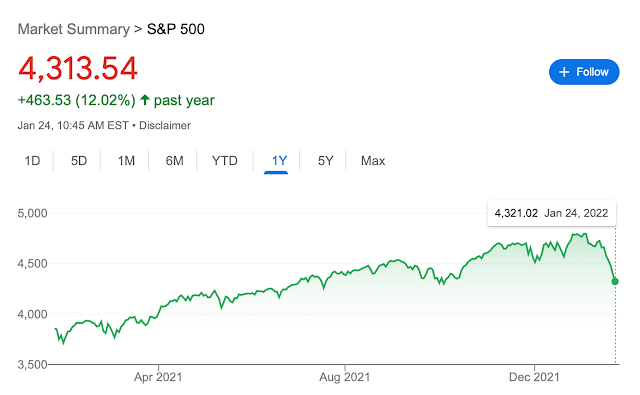

Torsten Slok, chief economist at Apollo Global Management, passes along the above gorgeous graph. Fed forecasts of interest rates behave similarly. So does the "market forecast" embedded in the yield curve, which usually slopes upward.

Torsten's conclusion:

> The forecasting track record of the economics profession when it comes to 10-year interest rates is not particularly impressive, see chart [above]. Since the Philadelphia Fed started their Survey of Professional Forecasters twenty years ago, the economists and strategists participating have been systematically wrong, predicting that long rates would move higher. Their latest release has the same prediction.

Well. Like the famous broken clock that is right twice a day, the forecasts do turn out "right" in times of higher rates. So don't necessarily run out and buy bonds today.

Can it possibly be true that professional forecasters are simply behaviorally dumb and refuse to learn, and that the institutions that hire them refuse to hire more rational ones?

My favorite alternative (which, I admit, I've advanced a few times on this blog): When a survey asks people "what do you 'expect'?" they do not answer with the true-measure conditional mean. By giving an answer pretty close to the actual yield curve, these forecasters are reporting a number close to the risk-neutral conditional mean, i.e. not \(\sum_s\pi(s)x(s)\) but \(\sum_s u'[c(s)]\pi(s)x(s) \big/ \sum_s u'[c(s)]\pi(s)\), which weights each state by marginal utility as well as by probability. The risk-neutral mean is a better sufficient statistic for decisions: pay attention not only to how likely events are but also to how painful it is to lose money in those events. Don't be wrong on the day the firm loses a lot of money.

The weather service also tends to overstate wind forecasts. I interpret a forecast of 30 mph winds as "if you go out and capsize your boat in a 30 mph gust, don't blame us." Likewise, "if you buy bonds and they tank, don't blame us" surely has to be part of a finance industry "forecast."
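To make the distinction concrete, here is a minimal two-state sketch. Every number in it is invented for illustration: the probabilities, the yields, and especially the marginal utilities standing in for \(u'[c(s)]\). The function names are hypothetical too. Because the state in which rates rise and bonds tank is assumed to carry higher marginal utility, the risk-neutral mean of the future yield comes out above the true-measure mean, which is the direction of the bias in the graph.

```python
# Toy two-state illustration (all numbers hypothetical): compare the
# true-measure mean of a future 10-year yield with its risk-neutral mean.


def true_mean(probs, payoffs):
    """True-measure conditional mean: sum_s pi(s) x(s)."""
    return sum(p * x for p, x in zip(probs, payoffs))


def risk_neutral_mean(probs, marg_utils, payoffs):
    """Risk-neutral mean: weight each state by pi(s) u'[c(s)], then normalize."""
    weights = [p * m for p, m in zip(probs, marg_utils)]
    total = sum(weights)
    return sum(w * x for w, x in zip(weights, payoffs)) / total


# State 0: rates fall. State 1: rates rise, bonds tank, and losses hurt more.
probs = [0.7, 0.3]        # true probabilities pi(s)
marg_utils = [1.0, 2.0]   # hypothetical marginal utilities u'[c(s)]
yields = [3.0, 5.0]       # future 10-year yield in each state, percent

print(f"true mean:         {true_mean(probs, yields):.3f}")
print(f"risk-neutral mean: {risk_neutral_mean(probs, marg_utils, yields):.3f}")
```

With equal marginal utilities in both states the two means coincide; the gap comes entirely from weighting the painful state more heavily.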

If the risk-neutral mean equals the market price, do nothing. And "do nothing" has to be the advice to the average investor, who by definition holds the market. Reporting the forecast implied by the yield curve directly has a certain logic to it.

Economists rush too quickly, I think, from surveys in which people are asked "what do you expect?" to bemoaning that the answers do not represent true-measure conditional means, and blaming that on stupidity. As if anyone who answers the question has the vaguest idea what the definitions of mean, median, mode, conditional, and true vs. risk-neutral measure are. We might have a bit more humility: they're giving us a sensible answer to a question posed in English, but since we meant the question in a foreign language, the technical language of economics, it is an answer to a different question.

(Teaching is good for you. At the beginning of the class, most of my students did not understand that "risk" can mean you earn more money than you "expected." I hope they got it by the end! But they were not wrong. In English, "risk" means downside risk. In portfolio analysis, it means variance. Know what words mean.)

But that also means: do not interpret the answers as true-measure conditional means!

This graph is particularly challenging since it concerns only the 10-year rate. Similar graphs of short-term rates also show a consistent bias toward forecasting rate increases that don't happen. But that bias is more excusable as a risk-neutral mean, since the yield curve slopes up over the first few years. A risk-neutral forecast of a rising 10-year yield instead corresponds to the slope of the curve from 10 years to longer maturities, which is typically smaller. Torsten, if you're listening, a comparison to the forecast implied by the forward curve at each date would be really interesting!
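One standard way to compute the forward-curve forecast suggested above is a minimal sketch like the following, assuming continuously compounded zero-coupon yields and ignoring convexity terms: the \(n\)-year yield implied \(k\) years ahead is the forward rate \(f = [(n+k)\,y_{n+k} - k\,y_k]/n\). The curve is hypothetical, not market data, and is shaped steep at the short end and nearly flat beyond 10 years. With that shape, the implied rise in the 1-year yield is several times the implied rise in the 10-year yield. The function name is likewise hypothetical.

```python
# Hypothetical sketch: the future n-year yield implied by today's zero-coupon
# curve (continuously compounded), i.e. the forward rate from year k to k+n.
# Under risk-neutral pricing, and ignoring convexity terms, this is the
# "market forecast" of the n-year yield k years ahead.


def implied_future_yield(curve, n, k):
    """Forward yield covering years k..k+n, from zero yields curve[m] in percent."""
    return ((n + k) * curve[n + k] - k * curve[k]) / n


# Hypothetical zero-coupon yields (percent), keyed by maturity in years:
# steep at the short end, nearly flat beyond 10 years. Not real data.
curve = {1: 4.00, 2: 4.20, 10: 4.50, 11: 4.55}

for n in (1, 10):
    f = implied_future_yield(curve, n, k=1)
    print(f"{n:>2}-year yield: today {curve[n]:.2f}, "
          f"implied in 1 year {f:.3f}, change {f - curve[n]:+.3f}")
```

Repeating this at each survey date with actual zero-coupon curves would give the market's implied path to set beside the survey average.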

***

Update: The same students who took the English meaning of "risk" to be "downside risk" also took the English meaning of "expected" to be "what happens if nothing goes wrong," something like the 90th percentile. The two meanings do make some sense, properly understood, especially in a world with skewed distributions: the lion gets you or does not, the plane crashes or does not, the bomb explodes or does not. Many important distributions are not normal, so mean and variance aren't the appropriate summaries!
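A minimal numeric sketch of the point about skewed distributions, with made-up payoffs and probabilities: a bet that pays 100 if nothing goes wrong and loses 1,000 in a rare disaster. The mean sits far below both the median and the 90th percentile, and both of those equal the "nothing goes wrong" outcome that the students called "expected." The helper names are hypothetical.

```python
# Hypothetical skewed payoff: "the plane crashes or does not."
# All numbers are made up for illustration.


def expected_value(outcomes, probs):
    """Probability-weighted mean of a discrete distribution."""
    return sum(p * x for p, x in zip(probs, outcomes))


def quantile(outcomes, probs, q):
    """Smallest outcome whose cumulative probability reaches q."""
    cumulative = 0.0
    for x, p in sorted(zip(outcomes, probs)):
        cumulative += p
        if cumulative >= q:
            return x
    return max(outcomes)  # guard against probabilities summing to just under 1


outcomes = [100.0, -1000.0]  # payoff if nothing goes wrong / in the rare disaster
probs = [0.95, 0.05]         # hypothetical probabilities

print("mean:           ", expected_value(outcomes, probs))
print("median:         ", quantile(outcomes, probs, 0.5))
print("90th percentile:", quantile(outcomes, probs, 0.9))
```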

I don't mean to say that surveys are useless. They are very important data and can be very useful for forecasting. If the survey forecast points up, that tells you something. A forecasting regression that includes survey data can be very useful. Just don't interpret the survey answer directly as a true-measure conditional mean, and then blather about irrationality when it isn't one.

The variation across people in survey forecasts is the really interesting and under-studied part of all this. The graph shows the average forecast across forecasters, but individual forecasters say wildly different things, even these professional ones. Why do survey forecasts vary so much across people who have the same information? And why do trading positions not vary over time or across people anything like as much as the gap between survey forecasts and market prices says they should? I think we're missing the interesting part of surveys: great heterogeneity in forecasts, with little heterogeneity in actions.